When Rebecca Moore received the formal notice in 2006 about a proposed forest thinning initiative, she nearly tossed it out. The letter included an unintelligible black-and-white topographic map of her central California community. It marked the location of the forest and the path that helicopters would take as they hauled logs off the steep hillsides.

Residents of California’s Santa Clara County received a copy of this black-and-white topographic map of areas affected by a logging proposal. (Courtesy of Rebecca Moore/Google Earth Outreach.)

Just before the proposal hit the trash, it captured her curiosity. “I wanted to understand what this map was saying, because I wanted to know if my community was threatened,” said Moore. “We have the largest remaining stand of old-growth redwood trees in Santa Clara County.”

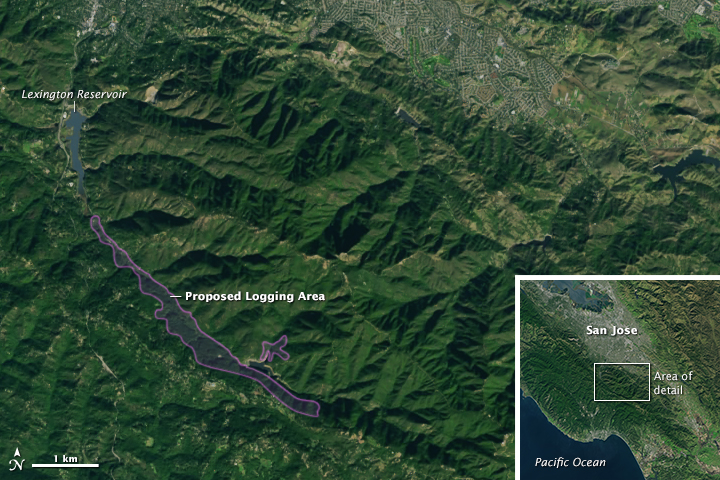

A Google employee, Moore wondered how the map might look in the newly released Google Earth. When she looked at the forest thinning plan on the satellite-based map, it became clear that helicopters would be towing trees right over several high-traffic areas, including a school. That Google Earth view helped persuade the community to stop the thinning initiative.

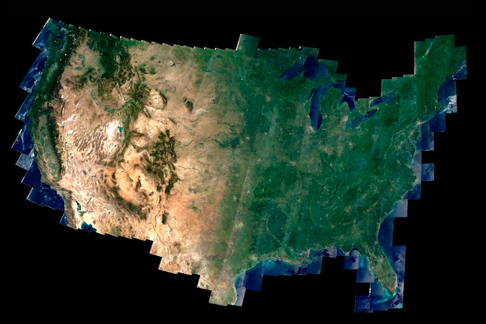

Everyone from scientists to land managers to citizen activists has started turning to the free, open archive of Landsat images to better view their communities and the changes happening to them. This image, acquired by Landsat 8 on December 31, 2014, shows a natural-color view of some of the redwood forests once slated for logging in the black-and-white map above. (NASA Earth Observatory image by Joshua Stevens, using Landsat data from the U.S. Geological Survey)

The experience got Moore thinking: Could Google Earth do more? Would it be possible to combine the satellite data behind Google Earth with the company’s powerful data-sorting algorithms and cloud computing?

“YouTube did a major machine learning (analysis) of the corpus of YouTube videos, and they found that there are a lot of cats,” Moore said. That analysis is just one example of a growing phenomenon known as “big data,” in which computers crunch vast quantities of information to identify useful patterns. Moore thought: What patterns would emerge if you applied the big data approach to Earth imagery?

It’s a question that Earth scientists also have been asking. In 2008, the U.S. Geological Survey took 3.6 million images acquired by Landsat satellites and made them free and openly available on the Internet. Dating back to 1972, the images are detailed enough to show the impact of human decisions on the land, and they provide the longest continuous view of Earth’s landscape from space.

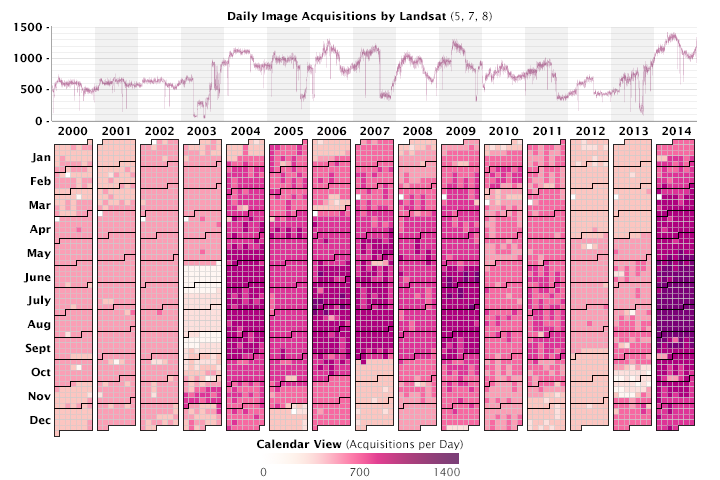

Since January 1, 2000, more than 4.3 million scenes have been captured by Landsat satellites and made available to the public. Landsat 8 was launched in February 2013, significantly boosting the rate to as many as 1,400 images each day. (Graph by Joshua Stevens, using data collected from the U.S. Geological Survey acquisitions archive.)

“With the full Landsat record available, we can finally look at really big problems, like the global carbon cycle,” said Jeff Masek, the Landsat 7 project scientist and a researcher at NASA’s Goddard Space Flight Center. Because carbon dioxide gas amplifies greenhouse warming, understanding how it moves into and out of the atmosphere through the carbon cycle is central to understanding Earth’s climate.

Forests store carbon, and the Landsat series of satellites offers the most consistent, detailed, and global means to measure changes in forest health—both natural and human-caused (such as deforestation). But to get a sense of how much carbon is entering the atmosphere from forests, scientists have to figure out how to sort through petabytes of data.

Evan Brooks, a statistician turned forestry scientist at Virginia Tech, began his expedition into the Landsat record with a single forest. In western Alabama, row after row of loblolly pine trees grow in tall straight lines. For the Westervelt Company, that forest is a crop. For Brooks, the forest is a proving ground for a new method of understanding Landsat measurements.

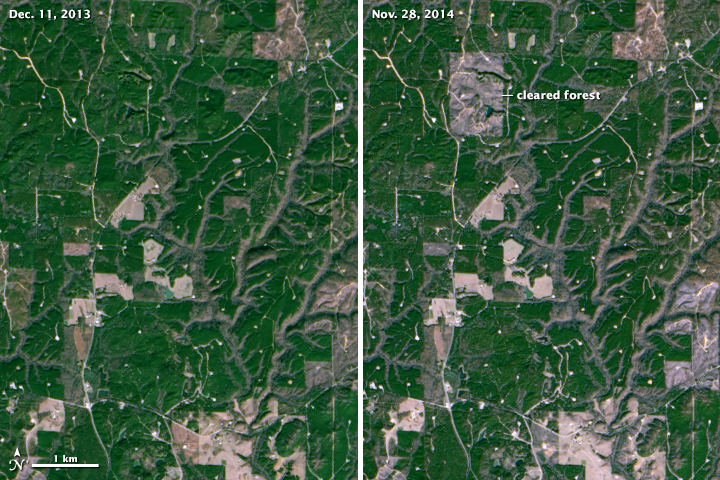

Landsat 8 acquired these two images of forest clearing in northern Alabama. The satellite can readily spot landscape changes down to the size of a baseball diamond. (NASA Earth Observatory image by Joshua Stevens, using Landsat data from the U.S. Geological Survey)

“In the past, if we wanted to look for change, we would find a cloud-free scene and then find another cloud-free scene from the same season in another year,” Brooks said. Before 2008, Earth scientists didn’t try anything more complex because Landsat data cost as much as $600 per scene. Once all of the Landsat data became available free of charge, the statistician in Brooks wondered if he could find a better way to identify change by using the entire record.

“I didn’t want to think about still-lifes anymore,” he said. “I am now treating each Landsat measurement like a frame in a movie. When you start seeing things moving through time, you get a deeper sense of what is happening. If a picture is worth a thousand words, then the movie is worth a thousand pictures.”

To build his movie of change in Westervelt’s forest, Brooks broke each Landsat scene into its smallest elements. Landsat satellites measure reflected and emitted light, both visible and infrared, for each 30-by-30-meter (900-square-meter) parcel of land on Earth. This area, about the size of a baseball diamond, makes up the smallest element of a Landsat image: a pixel.

Every pixel in a Landsat image represents measurements of reflected electromagnetic (EM) energy collected in narrow portions of the spectrum called “bands.” In a true-color Landsat 8 scene like the one above, bands 4, 3, and 2 are combined to re-create red, green, and blue as our eyes perceive the visible spectrum.
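To make the band combination concrete, the sketch below converts one pixel’s band values into an 8-bit display color. It assumes the values are surface reflectance between 0 and 1 and applies a simple linear stretch from 0 to 0.3; that stretch is a display choice made for illustration, not a USGS standard, and the function names are hypothetical.

```python
# Minimal sketch: turn one pixel's red, green, and blue band values into a
# displayable 8-bit color. The 0.0-0.3 reflectance stretch is an assumption
# chosen for illustration, not an official Landsat display setting.

def to_byte(reflectance, lo=0.0, hi=0.3):
    """Linearly stretch a reflectance value into 0-255, clipping outside [lo, hi]."""
    scaled = (reflectance - lo) / (hi - lo)
    return round(255 * min(max(scaled, 0.0), 1.0))


def true_color(pixel):
    """pixel maps Landsat 8 OLI band numbers to reflectance values.
    Bands 4, 3, and 2 (red, green, blue) become one (R, G, B) triple."""
    return tuple(to_byte(pixel[band]) for band in (4, 3, 2))


# A hypothetical vegetated pixel: little red, a bit more green,
# and bright near-infrared (band 5), which true color does not use.
forest_pixel = {2: 0.06, 3: 0.08, 4: 0.04, 5: 0.35}
print(true_color(forest_pixel))  # → (34, 68, 51)
```

Band 5 is carried in the dictionary but ignored, since a true-color image draws only on the three visible bands; other band combinations (false color) would swap near-infrared into one of the display channels.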

Brooks gathered every Landsat measurement of the Westervelt forest taken between 2009 and 2011 (roughly one image every two weeks) and charted the raw measurements for each pixel as a line graph. A forest, field, or city block changes in predictable ways from year to year—greening in the spring, browning in the winter—so the measured values of light from a particular pixel take a curved shape that is pretty much the same from year to year. “But where we see odd jumps or leaps,” Brooks noted, “we know that some change occurred.”
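The idea of comparing each new measurement against a pixel’s usual seasonal curve can be sketched in a few dozen lines. This is a minimal illustration under stated assumptions, not Brooks’s published method: it fits one annual cosine/sine pair to a baseline period by least squares, then flags later observations whose departure from that curve exceeds `k` robust standard deviations. The function names, the 16-day sampling, the baseline length, and `k = 4` are all hypothetical choices.

```python
import math
from statistics import median

PERIOD_DAYS = 365.25  # one seasonal cycle


def design_row(day):
    """Regressors for one observation: a constant plus one annual cosine/sine pair."""
    w = 2 * math.pi * day / PERIOD_DAYS
    return [1.0, math.cos(w), math.sin(w)]


def solve(a, b):
    """Solve the small linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_seasonal_curve(days, values):
    """Least-squares coefficients (a, b, c) of v(t) = a + b*cos(wt) + c*sin(wt)."""
    rows = [design_row(d) for d in days]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * v for r, v in zip(rows, values)) for i in range(3)]
    return solve(xtx, xty)


def predict(coef, day):
    """Value of the fitted seasonal curve on a given day."""
    return sum(c * x for c, x in zip(coef, design_row(day)))


def flag_changes(days, values, baseline_end, k=4.0):
    """Fit the seasonal curve to observations before baseline_end, then return the
    later days whose values depart from the curve by more than k robust sigmas."""
    base = [(d, v) for d, v in zip(days, values) if d < baseline_end]
    coef = fit_seasonal_curve([d for d, _ in base], [v for _, v in base])
    residuals = [v - predict(coef, d) for d, v in base]
    # Median absolute residual, scaled to approximate a standard deviation.
    sigma = max(1.4826 * median(abs(r) for r in residuals), 1e-6)
    return [d for d, v in zip(days, values)
            if d >= baseline_end and abs(v - predict(coef, d)) > k * sigma]


# Hypothetical pixel sampled every 16 days for 3 years; a harvest on day 900
# drops the signal by 0.3. The small deterministic "noise" keeps the example repeatable.
days = list(range(0, 3 * 365, 16))
values = [0.5 + 0.2 * math.cos(2 * math.pi * d / PERIOD_DAYS)
          + 0.01 * (((d * 7919) % 13) - 6) / 6
          - (0.3 if d >= 900 else 0.0)
          for d in days]
flagged = flag_changes(days, values, baseline_end=730)
print(flagged[0])  # first sampled day on or after the simulated harvest
```

Real Landsat series are irregular and gapped by clouds, so a fuller treatment would typically add more harmonics and a more careful rule for deciding when a run of departures counts as a change.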

The Westervelt Company keeps precise records of harvests and thinning and planting. When Brooks began to match his time-series, movie-like image analysis with the company’s records, he found that he could observe even subtle changes. Because even a small change in the landscape alters the quantity and type of light reflected from a pixel, the light-curve approach allowed Brooks to detect changes smaller than a single Landsat pixel.
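One simple way to see why a partial change registers in a 30-meter pixel is linear spectral mixing: treat the measured value as an area-weighted average of a “pure” forest value and a “pure” cleared value, then solve for the cleared share. This is an illustrative model, not necessarily what Brooks used, and the reflectance values below are hypothetical.

```python
# Minimal sketch of sub-pixel change under a linear mixing assumption:
# a pixel's reflectance is treated as an area-weighted average of "pure"
# forest and "pure" cleared ground. The endmember values are hypothetical.

def cleared_fraction(observed, forest, cleared):
    """Estimate the share of a pixel that was cleared from one band's reflectance."""
    fraction = (observed - forest) / (cleared - forest)
    return min(max(fraction, 0.0), 1.0)  # clip to a physically meaningful range


FOREST_RED = 0.04   # hypothetical red reflectance of closed-canopy forest
CLEARED_RED = 0.14  # hypothetical red reflectance of bare, harvested ground

# Thin 10 percent of the pixel: the observed value shifts only slightly,
# but the shift is enough to recover the cleared share.
observed = 0.9 * FOREST_RED + 0.1 * CLEARED_RED
print(round(observed, 3), round(cleared_fraction(observed, FOREST_RED, CLEARED_RED), 2))  # → 0.05 0.1
```

A single-band, single-date estimate like this is easily thrown off by noise and by seasonal shifts in the “pure” values, which is part of why tracking the whole time series is more informative than one scene.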

“Previous Landsat analyses might see the change from a forest to a golf course,” he said. “We can see the removal of 10 percent of a forest stand, well below Landsat’s pixel resolution.” That level of detail is important because many of the changes that Brooks sees are caused by human development and land management.

“The scale of human development is getting finer,” says Randy Wynne, a geographer at Virginia Tech. “In Virginia, the average rural parcel size is under 70 acres, and it’s dropping. Some 85 percent of forests are privately owned in the eastern United States. If you want to see human influence in most of the world’s forests, you need this kind of analysis. Nothing else can get at it.”

Brooks can run his forest analyses on a laptop, but he can only survey a small area. How do you scale up a pixel-based analysis to figure out how forests are changing around the world across four decades?

“The issue is scaling it up,” says Robert Kennedy, a remote sensing scientist at Boston University who has developed a similar analysis tool. “Even if you have a simple algorithm, you need a lot of computing to manage all of the data.”

How much data? “There are roughly 400 billion land pixels in a single global mosaic,” says Rama Nemani, a scientist who runs the NASA Earth Exchange (NEX), a supercomputing collaborative at NASA’s Ames Research Center. With at least one image of every location on Earth per season every year, the entire 43-year Landsat record contains more than 50 trillion pixels.
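The 50-trillion figure follows from simple multiplication. Taking Nemani’s estimate of 400 billion land pixels per global mosaic, four seasonal mosaics per year, and the 43-year record gives roughly 69 trillion pixels, consistent with “more than 50 trillion.” The function name is hypothetical.

```python
def landsat_pixel_count(pixels_per_mosaic, mosaics_per_year, years):
    """Total pixels if every year contributes a fixed number of global mosaics."""
    return pixels_per_mosaic * mosaics_per_year * years


# Figures from the text: ~400 billion land pixels per global mosaic,
# at least one image per season (4 per year), over a 43-year record.
total = landsat_pixel_count(400e9, 4, 43)
print(f"{total / 1e12:.1f} trillion pixels")  # → 68.8 trillion pixels
```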

“How could you handle that on your desktop?” asked Rebecca Moore. “You can’t. This is where cloud computing comes in.”

The processing and storage of data has changed significantly since 1972. Landsat Return Beam Vidicon and Multispectral Scanner data were originally stored on thousands of rolls of 70mm film, as shown in a 1975 photo (top left) of the USGS Earth Resources Observation Systems data center. Four decades later, the storage is digital and mind-numbingly dense. Data silos at EROS (top right) hold thousands of tape cartridges storing up to 8 terabytes apiece. Google also maintains vast forests of servers and processors at its data centers. (Photos courtesy of U.S. Geological Survey and Connie Zhou/Google.)

It is a conclusion that some University of Maryland scientists reached over the course of two decades. Matthew Hansen and Sam Goward are geographers and remote sensing specialists who have been part of a team mapping Earth’s land cover—forests and cities, farms and water—since the mid-1990s.

“We wanted to know the impact of disturbance—harvesting, thinning, fires, storms—things that lead to changes in forests,” said Goward. “Every time you disturb a forest, it restarts the growth cycle, and when you do that, you impact the carbon cycle. Very few forests make it through a full growth cycle because of disturbances, but no one knows the patterns of forest disturbance or how they impact the carbon cycle.”

For years, Goward and Hansen worked with low-resolution data that did not have a lot of detail. But disturbance happens on a small scale, and to see it they needed something like Landsat’s 30-meter resolution. Until 2008, Landsat data were too expensive to even consider building a global map. “We did the science we could afford, not the science we wanted to do,” Goward said.

Then in 2008, the game started to change. “When the Landsat archive opened up, we mapped forests in Indonesia and European Russia,” Hansen said. “We then knew we could make a global map, but we didn’t have the computing power yet.”

Finally, while attending an international meeting about deforestation and forest disturbance, Hansen was introduced to Google’s Rebecca Moore. He saw an opportunity. “Their computing expertise fit perfectly with our geographic knowledge,” Hansen said. “So we ported our code for mapping forests to the Google system.”

In just a couple of days, Google applied the University of Maryland code to 700,000 Landsat scenes, discarding cloudy pixels and keeping clear ones. It analyzed the remaining sequence of pixels and assigned each a flag: was it forested or not? The analysis noted the date that forests were cleared or the date when they had grown in enough to be counted as forest again. The entire process took one million hours of computing time on 10,000 central processing units (CPUs). Moore noted: “The analysis would have taken 15 years on a single computer.”
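The per-pixel logic described here (drop cloudy observations, decide forest or not, record when that status changes) can be sketched as follows. This is a hypothetical simplification, not the University of Maryland code: it assumes each observation already carries a tree-cover estimate and a cloud flag, summarizes each year with the median of its clear observations, and uses an illustrative 30 percent tree-cover threshold.

```python
from collections import defaultdict
from statistics import median

FOREST_THRESHOLD = 0.30  # hypothetical: tree-cover fraction that counts as "forest"


def yearly_forest_flags(observations, threshold=FOREST_THRESHOLD):
    """observations: iterable of (year, tree_cover_fraction, is_cloudy) for one pixel.
    Cloudy observations are discarded; each year's clear observations are
    summarized by their median and compared against the threshold."""
    clear_by_year = defaultdict(list)
    for year, cover, is_cloudy in observations:
        if not is_cloudy:
            clear_by_year[year].append(cover)
    return {year: median(covers) >= threshold
            for year, covers in sorted(clear_by_year.items())}


def change_years(flags):
    """Return (loss_year, gain_year): the first year a forested pixel became
    non-forest, and the first year a non-forest pixel became forest (None if never)."""
    loss_year = gain_year = None
    years = sorted(flags)
    for prev, cur in zip(years, years[1:]):
        if flags[prev] and not flags[cur] and loss_year is None:
            loss_year = cur
        if not flags[prev] and flags[cur] and gain_year is None:
            gain_year = cur
    return loss_year, gain_year


# One hypothetical pixel: cleared in 2002, regrown past the threshold by 2004.
pixel = [
    (2000, 0.80, False), (2000, 0.10, True),   # the cloudy reading is ignored
    (2001, 0.75, False),
    (2002, 0.05, False), (2002, 0.90, True),
    (2003, 0.10, False),
    (2004, 0.40, False),
]
flags = yearly_forest_flags(pixel)
print(change_years(flags))  # → (2002, 2004)
```

Years with no clear observation simply drop out of the flags, which is one place where a production system would need more care than this sketch.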

The resulting map, released in 2013, shows how Earth’s forests changed between 2000 and 2013. “It is the first global assessment of forest change in which you can see the human impact,” said Masek. And the message is: People have had a huge impact on forests.

Hansen and colleagues produced an interactive global forest change map based on Landsat data. In the image above, forest loss in protected regions of Côte d’Ivoire is visualized by the year in which the loss occurred.

“Less than 1 percent of old-growth forest remains in the United States,” said Hansen. But the real surprise was how quickly tropical forests are disappearing. Brazil has deservedly gotten a lot of credit for reducing its deforestation rate in the past decade, he noted, but forest cover loss has increased so much in other tropical countries that the global rate is soaring.

Such a revelation would not have been possible without the “big data” approach. “In the past, we were confounded by clouds in the tropics,” Hansen said. “Being able to mine the full Landsat archive allowed us to literally see places we haven’t seen before at this resolution. We have cloud-free data built from thousands of inputs over tropical locations like Gabon or Papua New Guinea.”

Apart from revealing patterns and trends in forest cover, the global forest map represents a major shift in the way Earth science is done.

“In the past, I used to bring data to my computer and analyze it,” Masek noted. But the questions have gotten bigger than that type of analysis can sustain. “Now it’s impossible to bring all the data to my computer.” Instead, scientists develop analysis tools and bring them to computation workhorses like Google Earth Engine or NEX. “We can implement our algorithms where the data live. It’s a different way of mining the Landsat archive.”

In 1974, scientists released the first photo mosaic of the 48 contiguous United States. The map was assembled from 595 cloud-free black-and-white images collected by Landsat 1 between July 25 and October 31, 1972. The printed image was 10 feet by 16 feet, at a scale of 1:1,000,000 (one inch on the map equaled one million inches on the ground).
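The mosaic’s scale can be checked with a little unit conversion: at one map inch to one million ground inches, each foot of paper spans about 189 miles of ground (12 million inches divided by 63,360 inches per mile), so a 10-by-16-foot print covers roughly 1,900 by 3,000 miles, enough to hold the lower 48 states. The function name is hypothetical.

```python
INCHES_PER_FOOT = 12
INCHES_PER_MILE = 63_360
SCALE = 1_000_000  # one inch on the map = one million inches on the ground


def ground_miles(map_feet):
    """Convert a length on the 1974 mosaic (in feet) to miles on the ground."""
    return map_feet * INCHES_PER_FOOT * SCALE / INCHES_PER_MILE


print(round(ground_miles(10)), round(ground_miles(16)))  # → 1894 3030
```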

The Web-enabled Landsat Data (WELD) project now creates cloudless mosaics of the United States annually using computer automation and 40 years of calibration data. Each pixel in the image represents a square 30 meters (about 100 feet) on a side.
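Building a cloudless mosaic comes down to choosing, for every pixel, a value from the observations that were not cloudy. The sketch below uses the per-pixel median of clear observations, a common and simple compositing rule; it is a stand-in for illustration, and WELD’s actual selection criteria may differ. The grid layout, the `(value, is_cloudy)` tuples, and the function name are assumptions.

```python
from statistics import median


def cloud_free_composite(scenes):
    """scenes: list of co-registered scenes, each a 2-D grid of
    (value, is_cloudy) tuples. Returns a grid holding, for each pixel, the
    median of its clear values, or None where every observation was cloudy."""
    rows, cols = len(scenes[0]), len(scenes[0][0])
    composite = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            clear = [scene[r][c][0] for scene in scenes if not scene[r][c][1]]
            out_row.append(median(clear) if clear else None)
        composite.append(out_row)
    return composite


# Three hypothetical passes over a 1x3 strip; cloudy readings are bright.
scenes = [
    [[(0.10, False), (0.90, True), (0.90, True)]],
    [[(0.12, False), (0.20, False), (0.85, True)]],
    [[(0.95, True), (0.22, False), (0.80, True)]],
]
print(cloud_free_composite(scenes))
```

The third pixel stays empty because every pass was cloudy; with decades of acquisitions, as in the full Landsat archive, such gaps become rare even in persistently cloudy regions.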

Until 2008, only 4 percent of the Landsat archive had even been examined; since the opening of the archives, the big data approach is allowing scientists to dig deeper into all of the data. This allows them to make connections they couldn’t make before.

“You can look at changes over time and you can see how one process affects another,” said Kennedy. “We are now able to ask questions about where, when, and why two processes interact.”

Kennedy has started to map biomass yearly. “We can quantify how much carbon is lost every year due to fire or clearing,” he said. And for the first time, he can ask questions like: Is the system responding differently now than it has in the past? Are we losing more carbon to fires? To insects? Are forests growing back more quickly? “We can track the full trajectory of a forest over the years.”
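Turning yearly biomass maps into a yearly carbon-loss figure is, at its simplest, a matter of differencing consecutive years. The sketch below is a hypothetical illustration, not Kennedy’s method: it assumes biomass in megagrams per hectare for each 30-by-30-meter pixel (0.09 hectare), counts only pixels whose biomass dropped, and converts biomass to carbon with the rough rule of thumb that about half of dry biomass is carbon.

```python
PIXEL_AREA_HA = 0.09   # one 30 m x 30 m Landsat pixel = 900 m^2 = 0.09 ha
CARBON_FRACTION = 0.5  # assumption: roughly half of dry biomass is carbon


def yearly_carbon_loss(biomass_by_year):
    """biomass_by_year: {year: [biomass in Mg/ha for each pixel]}, same pixel order
    every year. Returns {year: megagrams of carbon lost since the previous year},
    counting only pixels whose biomass decreased (gross loss, not net change)."""
    years = sorted(biomass_by_year)
    losses = {}
    for prev, cur in zip(years, years[1:]):
        drop = sum(max(before - after, 0.0)
                   for before, after in zip(biomass_by_year[prev], biomass_by_year[cur]))
        losses[cur] = drop * PIXEL_AREA_HA * CARBON_FRACTION
    return losses


# Two hypothetical pixels: one burned (200 -> 100 Mg/ha), one kept growing.
print(yearly_carbon_loss({2010: [200.0, 150.0], 2011: [100.0, 160.0]}))  # → {2011: 4.5}
```

Because growth on other pixels is not subtracted, this measures gross loss; answering Kennedy’s questions about regrowth would mean tracking the gains alongside it.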

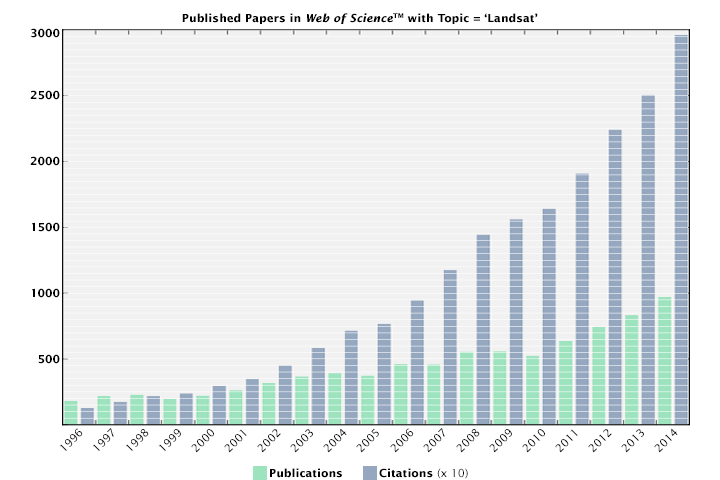

Just as the data grow, publications and citations grow too. The number of peer-reviewed scientific articles using Landsat has risen sharply in the past two decades. The Web of Science includes over 15,000 publications on topics that include Landsat data.

And the scale and scope can grow even wider. For instance, knowing how forests have changed leads to other questions about global change. “How much carbon is going into the atmosphere through forest clearing and management? How are ecosystems changing because of climate change? What are the vegetation patterns of the planet going to look like in 200 to 300 years?” Masek asked. “Landsat gives a 40-year synopsis of what has happened, and that not only lets us see how the forests are changing now, but it could help us understand how life on Earth will change in the future.”

“We are doing statistics on the planet,” says Moore. “I’m really curious to see what people find in all of this satellite data.”